Introduction

Have you ever wondered how you can create state-of-the-art sentence embeddings with Transformers and use them in downstream tasks like semantic textual similarity?

In this tutorial, you will learn how to build a vector-based search engine with sentence transformers and Faiss. If you want to jump straight into the code, check out the GitHub repo and the Google Colab notebook.

In the second part of the tutorial, you will learn how to serve the search engine in a Streamlit application and deploy it with Docker and AWS Elastic Beanstalk.

Why build a vector-based search engine?

Keyword-based search engines are easy to use and work well in most scenarios. You ask for machine learning papers and they return a bunch of results containing an exact match or a close variation of the query like machine-learning. Some of them might even return results containing synonyms of the query or words that appear in a similar context. Others, like Elasticsearch, do all of these things — and even more — in a rapid and scalable way. However, keyword-based search engines usually struggle with:

- Complex queries or queries containing words that have a dual meaning.

- Long queries such as a paper abstract or a paragraph from a blog.

- Users who are unfamiliar with a field’s jargon or users who would like to do exploratory search.

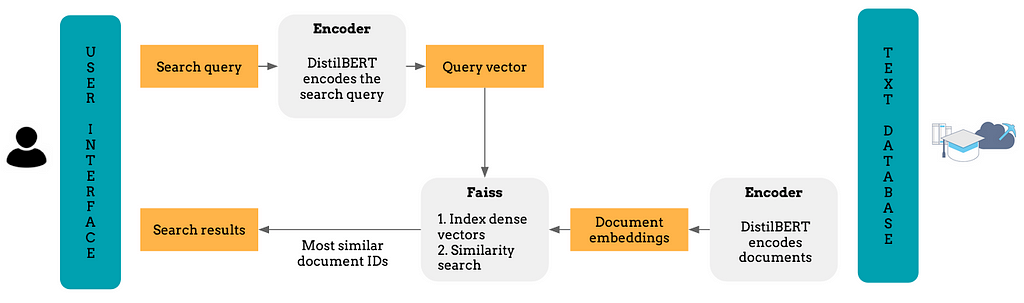

Vector-based (also called semantic) search engines tackle those pitfalls by finding a numerical representation of text queries using state-of-the-art language models, indexing them in a high-dimensional vector space and measuring how similar a query vector is to the indexed documents.

Indexing, vectorisation and ranking methods

Before diving into the tutorial, I will briefly explain how keyword-based and vector-based search engines (1) index documents (i.e. store them in an easily retrievable form), (2) vectorise text data and (3) measure how relevant a document is to a query. This will help us highlight the differences between the two systems and understand why vector-based search engines might give more meaningful results for long-text queries.

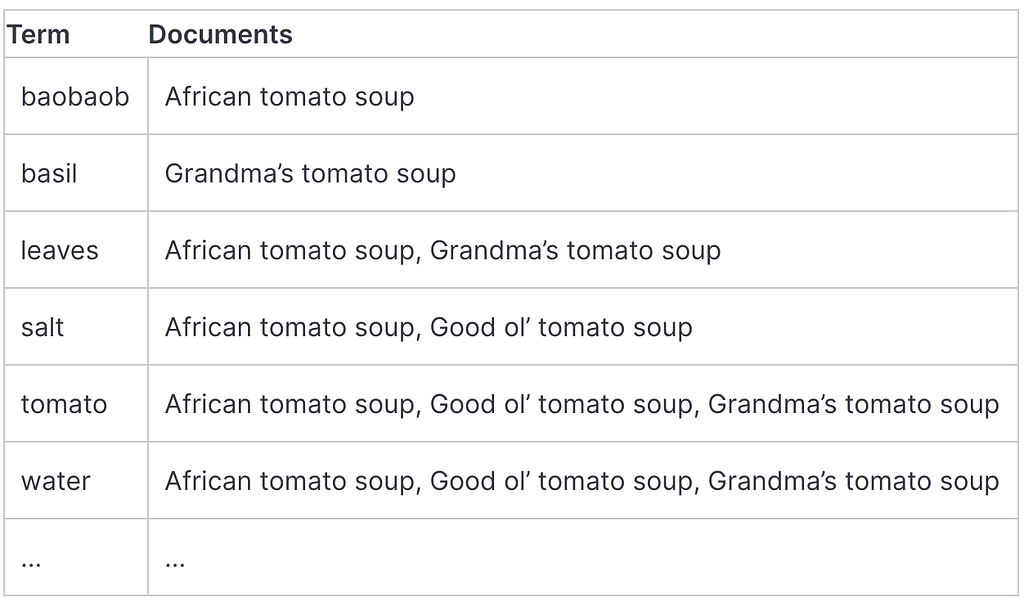

1. Keyword-based search engines

Let’s use an oversimplified version of Elasticsearch as an example. Elasticsearch uses a tokeniser to split a document into tokens (i.e. meaningful textual units), which are mapped to numerical sequences and used to build an inverted index.

In parallel, Elasticsearch represents every indexed document with a high-dimensional, weighted vector, where each distinct index term is a dimension and its value (or weight) is calculated with TF-IDF.

To find relevant documents and rank them, Elasticsearch combines a Boolean Model (BM) with a Vector Space Model (VSM). BM marks which documents contain the terms of a user’s query and VSM scores how relevant they are. During search, the query is transformed to a vector using the same TF-IDF pipeline, and the VSM score of document d for query q is the cosine similarity of the weighted vectors V(q) and V(d).
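To make the scoring step concrete, here is a minimal, standard-library sketch of the idea: an inverted index, TF-IDF weights and cosine similarity. It is a toy illustration and not how Elasticsearch or Lucene are implemented. The whitespace tokeniser, the `log(N / df) + 1` IDF variant and the function names (`tokenise`, `build_index`, `search`) are assumptions made for this example.

```python
import math
from collections import Counter


def tokenise(text):
    """Split text into lowercase tokens (real tokenisers do much more)."""
    return text.lower().split()


def build_index(docs):
    """Return the inverted index, IDF weights and one TF-IDF vector per document."""
    tokenised = [tokenise(doc) for doc in docs]
    inverted = {}  # term -> set of IDs of the documents that contain it
    for doc_id, tokens in enumerate(tokenised):
        for term in set(tokens):
            inverted.setdefault(term, set()).add(doc_id)
    # One common IDF variant; rarer terms get a larger weight.
    idf = {term: math.log(len(docs) / len(ids)) + 1.0 for term, ids in inverted.items()}
    vectors = [
        {term: tf * idf[term] for term, tf in Counter(tokens).items()}
        for tokens in tokenised
    ]
    return inverted, idf, vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as {term: weight} dicts."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0


def search(query, docs):
    """Rank the documents that share at least one term with the query."""
    inverted, idf, vectors = build_index(docs)
    q_vec = {t: tf * idf[t] for t, tf in Counter(tokenise(query)).items() if t in idf}
    # Boolean model: only documents containing a query term are candidates.
    candidates = set().union(*(inverted[t] for t in q_vec))
    # Vector space model: score the candidates with cosine similarity.
    ranked = sorted(((cosine(q_vec, vectors[i]), i) for i in candidates), reverse=True)
    return [(doc_id, score) for score, doc_id in ranked]


docs = [
    "fake news spreads on social media",
    "deep learning for image recognition",
    "fact checking fake news",
]
print(search("fake news", docs))
print(search("misinformation", docs))
```

The second query returns nothing because the word “misinformation” never appears in the toy documents, even though two of them are clearly about it. That is the limitation the rest of this post tries to address.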

This way of measuring similarity is very simplistic and not scalable. The workhorse behind Elasticsearch is Lucene which employs various tricks, from boosting fields to changing how vectors are normalised, to speed up the search and improve its quality.

Elasticsearch works great in most cases. However, we would like to create a system that pays attention to the words’ context too. This brings us to vector-based search engines.

2. Vector-based search engines

We need to create document representations that consider the context of the words too. We also need an efficient and reliable way to retrieve relevant documents stored in an index.

Creating dense document vectors

In recent years, the NLP community has made strides on that front, with many deep learning models being open-sourced and distributed by packages like Hugging Face’s Transformers, which provides access to state-of-the-art, pretrained models. Using pretrained models has many advantages:

- They usually produce high-quality embeddings as they were trained on large amounts of text data.

- It’s straightforward to fine-tune a model to your task.

These models produce a fixed-size vector for each token in a document. How do we get document-level vectors though? This is usually done by averaging or pooling the word vectors. However, these approaches produce below-average sentence and document embeddings, usually worse than averaging GloVe vectors.
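As a rough illustration of the averaging approach mentioned above, the sketch below mean-pools token vectors into one vector per document with NumPy. The function name `mean_pool`, the array shapes and the use of an attention mask to ignore padding tokens are assumptions for this example, not code from a specific library.

```python
import numpy as np


def mean_pool(token_vectors, attention_mask):
    """Average the token vectors of each document, ignoring padded positions.

    token_vectors:  array of shape (n_docs, n_tokens, dim)
    attention_mask: array of shape (n_docs, n_tokens), 1 for real tokens, 0 for padding
    """
    mask = attention_mask[..., None].astype(token_vectors.dtype)
    summed = (token_vectors * mask).sum(axis=1)
    # Guard against division by zero for documents without any real token.
    counts = np.clip(mask.sum(axis=1), 1e-9, None)
    return summed / counts


# One document with two real tokens and one padding token.
token_vectors = np.array([[[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]])
attention_mask = np.array([[1, 1, 0]])
print(mean_pool(token_vectors, attention_mask))
```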

To build our semantic search engine, we will use Sentence Transformers, a library that fine-tunes BERT-based models to produce semantically meaningful embeddings of long-text sequences.

Building an index and measuring relevance

The most naive way to retrieve relevant documents would be to measure the cosine similarity between the query vector and every document vector in our database and return those with the highest score. Unfortunately, this is very slow in practice because every query has to be compared with every indexed document.
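For reference, the naive approach can be sketched in a few lines of NumPy. The function name `cosine_search` and the toy vectors are made up for this example. Note that each query touches every row of the document matrix, which is why the cost grows linearly with the size of the collection.

```python
import numpy as np


def cosine_search(query_vector, doc_matrix, k=5):
    """Return the positions and scores of the k documents most similar to the query."""
    # Normalise so that a dot product equals the cosine similarity.
    docs = doc_matrix / np.linalg.norm(doc_matrix, axis=1, keepdims=True)
    query = query_vector / np.linalg.norm(query_vector)
    scores = docs @ query  # one comparison per indexed document
    top = np.argsort(-scores)[:k]
    return top, scores[top]


doc_matrix = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
positions, scores = cosine_search(np.array([1.0, 0.1]), doc_matrix, k=2)
print(positions, scores)
```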

The preferred approach is to use Faiss, a library for efficient similarity search and clustering of dense vectors. Faiss offers a large collection of indexes and composite indexes. Moreover, given a GPU, Faiss scales up to billions of vectors!

Tutorial: Building a vector-based search engine with Sentence Transformers and Faiss

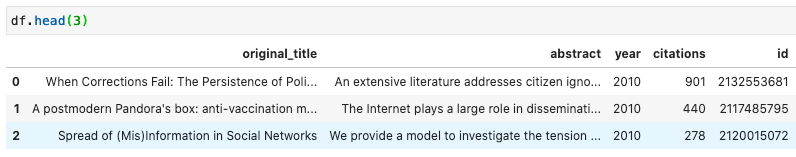

In this practical example, we will work with real-world data. I created a dataset of 8,430 academic articles on misinformation, disinformation and fake news published between 2010 and 2020 by querying the Microsoft Academic Graph with Orion.

I retrieved the papers’ abstract, title, citations, publication year and ID. I did minimal data cleaning like removing papers without an abstract. Here is what the data look like:

Importing Python packages and reading the data from S3

Let’s import the required packages and read the data. The file is public so you can run the code on Google Colab or locally by accessing the GitHub repo!
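A minimal sketch of the loading step with pandas could look like the following. The column names (`id`, `abstract`) and the file name in the usage note are placeholders I made up for illustration; swap in the actual location and schema of the dataset from the repo.

```python
import pandas as pd


def load_papers(source):
    """Read the papers from a CSV path, URL or file-like object.

    Assumes (hypothetically) that the file has `id` and `abstract` columns.
    """
    df = pd.read_csv(source)
    # Safety net: the abstracts are what we will encode, so drop rows without one.
    df = df.dropna(subset=["abstract"])
    return df.reset_index(drop=True)
```

You would then call something like `df = load_papers("misinformation_papers.csv")`, where the file name is again only a placeholder.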

Vectorising documents with Sentence Transformers

Next, let’s encode the paper abstracts. Sentence Transformers offers a number of pretrained models, some of which can be found in this . Here, we will use the distilbert-base-nli-stsb-mean-tokens model, which performs well on Semantic Textual Similarity tasks and is considerably faster than BERT because it is much smaller.

Here, we will:

- Instantiate the transformer by passing the model name as a string.

- Switch to a GPU if it is available.

- Use the `.encode()` method to vectorise all the paper abstracts.
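The three steps above can be sketched as follows. This is one possible arrangement rather than the only way to do it: the helper names `load_model` and `encode_abstracts` are made up for this example, and the heavy libraries are imported inside `load_model` so the rest of the code can be imported without them.

```python
import numpy as np

MODEL_NAME = "distilbert-base-nli-stsb-mean-tokens"


def load_model(name=MODEL_NAME):
    """Instantiate the transformer and place it on a GPU when one is available."""
    # Imported lazily because both libraries are slow to load.
    import torch
    from sentence_transformers import SentenceTransformer

    device = "cuda" if torch.cuda.is_available() else "cpu"
    return SentenceTransformer(name, device=device)


def encode_abstracts(model, abstracts, batch_size=32):
    """Vectorise the abstracts and return an array with one row per abstract."""
    embeddings = model.encode(
        list(abstracts), batch_size=batch_size, show_progress_bar=True
    )
    return np.asarray(embeddings)
```

With the data in a DataFrame `df` that has an `abstract` column (both names are assumptions), the call would be `embeddings = encode_abstracts(load_model(), df["abstract"])`. The first call to `load_model()` downloads the pretrained weights.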

It is recommended to use a GPU when vectorising documents with Transformers. Google Colab offers one for free! If you want to run it on AWS, check out my guide on how to launch a GPU instance on AWS for machine learning.

Indexing documents with Faiss

Faiss contains algorithms that search in sets of vectors of any size, even ones that do not fit in RAM. To learn more about Faiss, you can read their paper on arXiv or their .

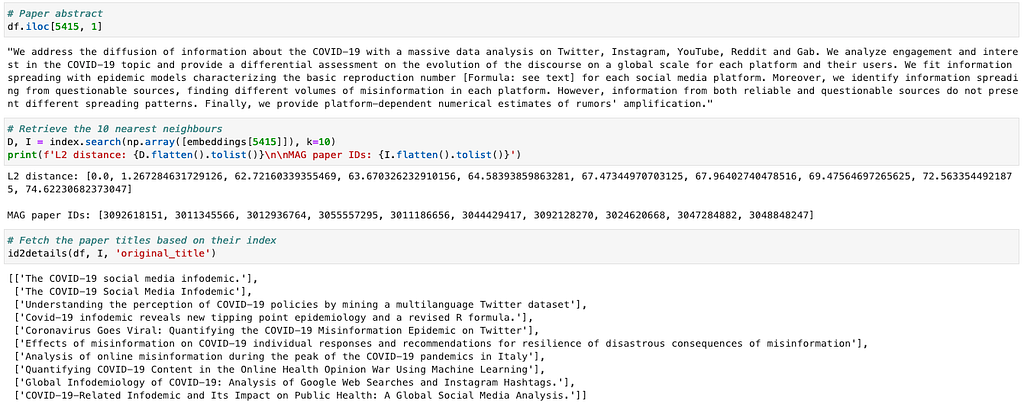

Faiss is built around the Index object which contains, and sometimes preprocesses, the searchable vectors. It handles collections of vectors of a fixed dimensionality d, typically from a few tens to a few hundred.

Faiss uses only 32-bit floating point matrices. This means we will have to change the data type of the input before building the index.

Here, we will use the IndexFlatL2 index that performs a brute-force L2 distance search. It works well with our dataset. However, it can be very slow with a large dataset as its search time scales linearly with the number of indexed vectors. Faiss offers faster indexes too!

To create an index with the abstract vectors, we will:

- Change the data type of the abstract vectors to float32.

- Build an index and pass it the dimension of the vectors it will operate on.

- Pass the index to IndexIDMap, an object that enables us to provide a custom list of IDs for the indexed vectors.

- Add the abstract vectors and their ID mapping to the index. In our case, we will map vectors to their paper IDs from Microsoft Academic Graph.

To test that the index works as expected, we can query it with an indexed vector and retrieve its most similar documents as well as their distances. The first result should be our query!

Searching with user queries

Let’s try to find relevant academic articles for a new, unseen search query. In this example, I will query our index with the first paragraph of the article “Can WhatsApp benefit from debunked fact-checked stories to reduce misinformation?”, which was published in the HKS Misinformation Review.

To retrieve academic articles for a new query, we would have to:

- Encode the query with the same sentence-DistilBERT model we used for the abstract vectors.

- Change its data type to float32.

- Search the index with the encoded query.

For convenience, I have wrapped up these steps in the vector_search() function.
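A minimal version of such a function might look like the sketch below. It assumes that `model` is any object with an `encode()` method that accepts a list of strings (such as a Sentence Transformers model) and that `index` is any object with a Faiss-style `search()` method. See the GitHub repo for the exact implementation used in the tutorial.

```python
import numpy as np


def vector_search(query, model, index, num_results=10):
    """Encode the query and return the distances and IDs of its nearest neighbours."""
    # encode() works on a list of strings, so wrap a single query string.
    if isinstance(query, str):
        query = [query]
    # Faiss only accepts float32 matrices.
    vectors = np.asarray(model.encode(query), dtype="float32")
    distances, ids = index.search(vectors, num_results)
    return distances, ids
```

The returned IDs are the paper IDs we stored in the index, so they can be used to look up the titles and abstracts of the matching papers.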

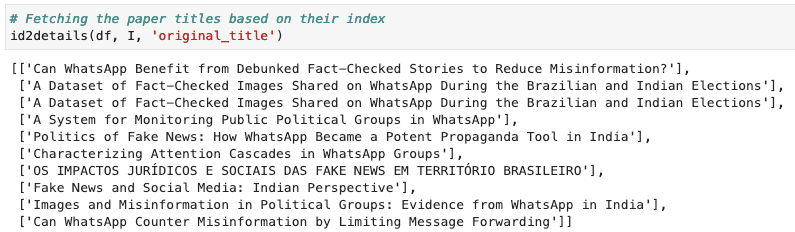

The article discusses misinformation, fact-checking, WhatsApp and elections in Brazil and India. We would expect our vector-based search engine to return results on these topics. Judging by the paper titles, most of the results look quite relevant to our query. Our search engine works alright!

Conclusion

In this tutorial, we built a vector-based search engine using Sentence Transformers and Faiss. Our index works well but it’s fairly simple. We could improve the quality of the embeddings by using a domain-specific transformer like SciBERT which has been trained on papers from the corpus of semanticscholar.org. We could also remove duplicates before returning the results and experiment with other indexes.

For those working with Elasticsearch, Open Distro introduced an approximate k-NN similarity search feature which is also part of AWS Elasticsearch service. In another blog, I will dive into that too!

Finally, you can find the code on GitHub and try it out with Google Colab.

References

[1] Thakur, N., Reimers, N., Daxenberger, J. and Gurevych, I., 2020. Augmented SBERT: Data Augmentation Method for Improving Bi-Encoders for Pairwise Sentence Scoring Tasks. arXiv preprint arXiv:2010.08240.

[2] Johnson, J., Douze, M. and Jégou, H., 2019. Billion-scale similarity search with GPUs. IEEE Transactions on Big Data.

How to Build a Semantic Search Engine With Transformers and Faiss was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.